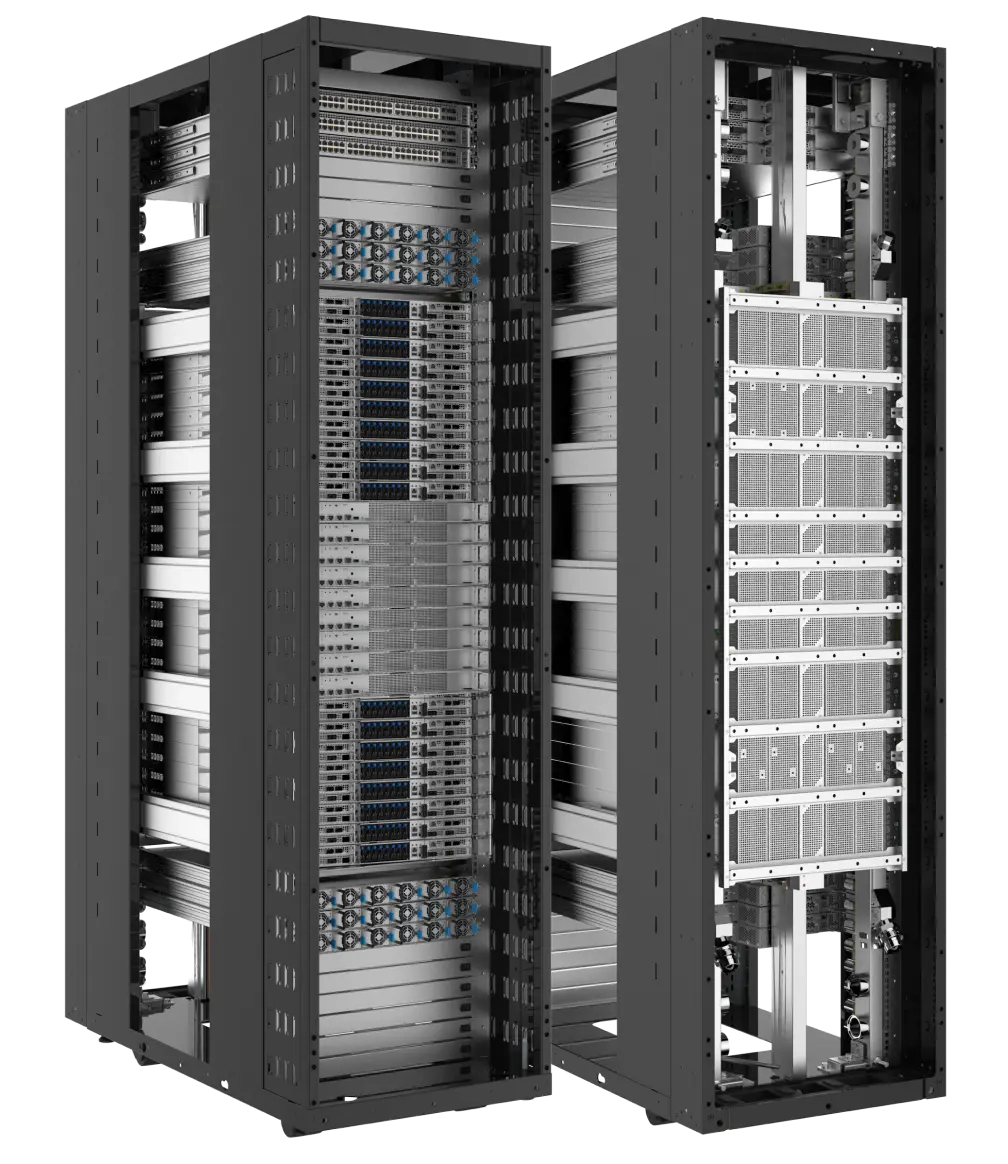

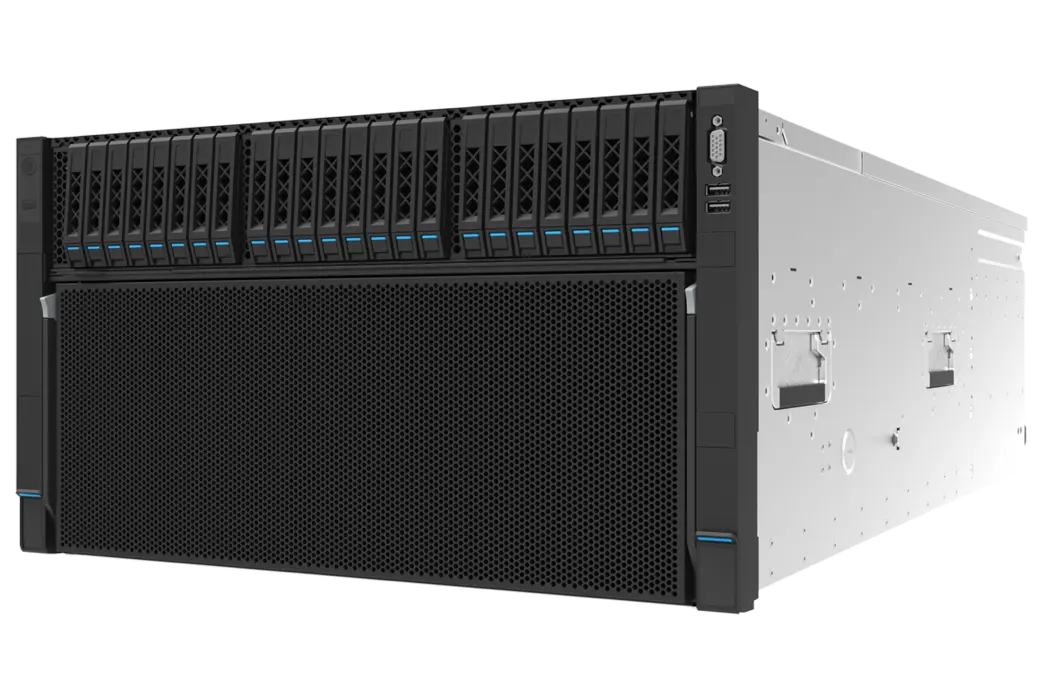

AI Rack Solution based on NVIDIA GB200 NVL72

KRS8000

Exascale Rack for Trillion-Parameter LLM Inference

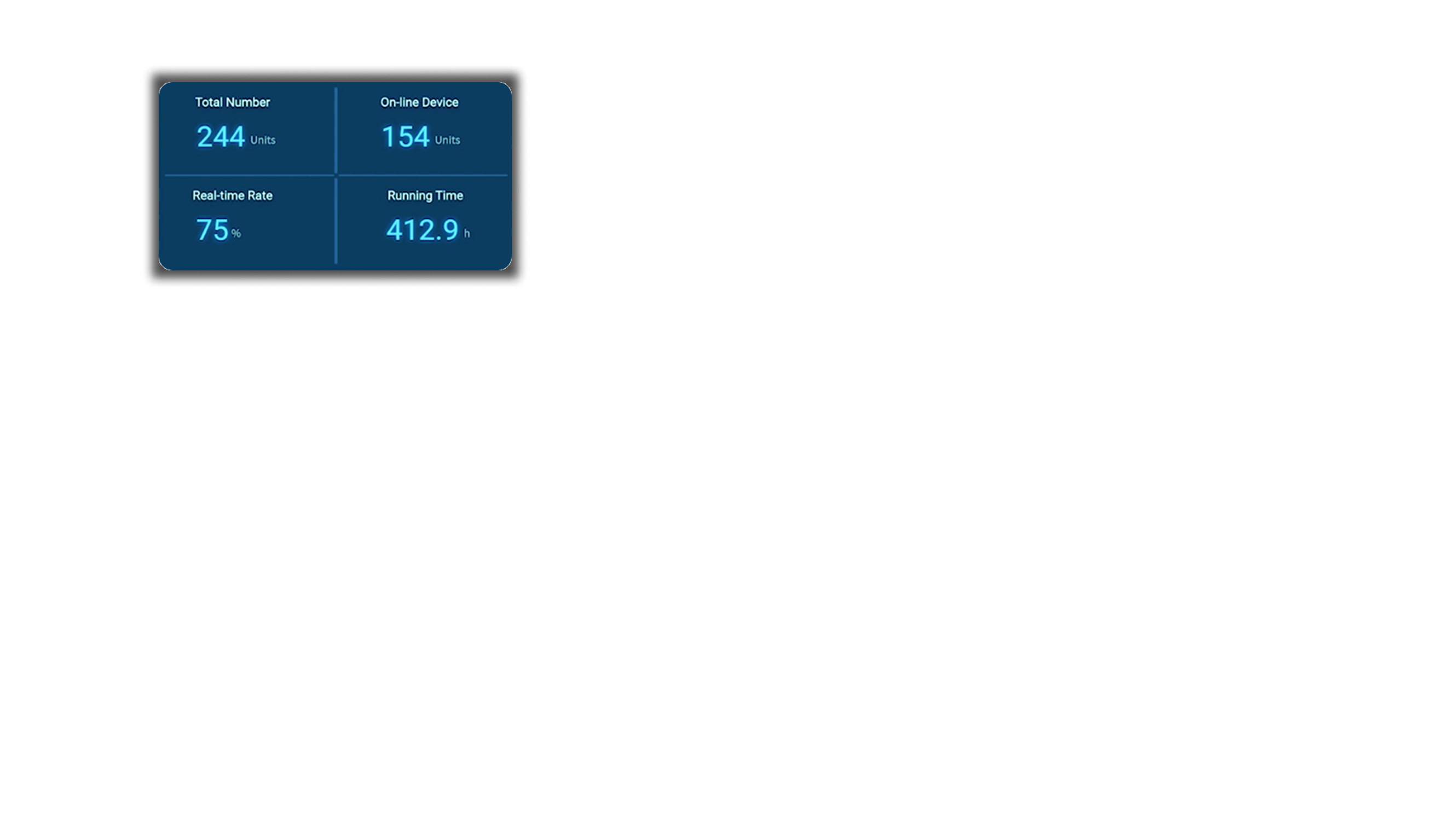

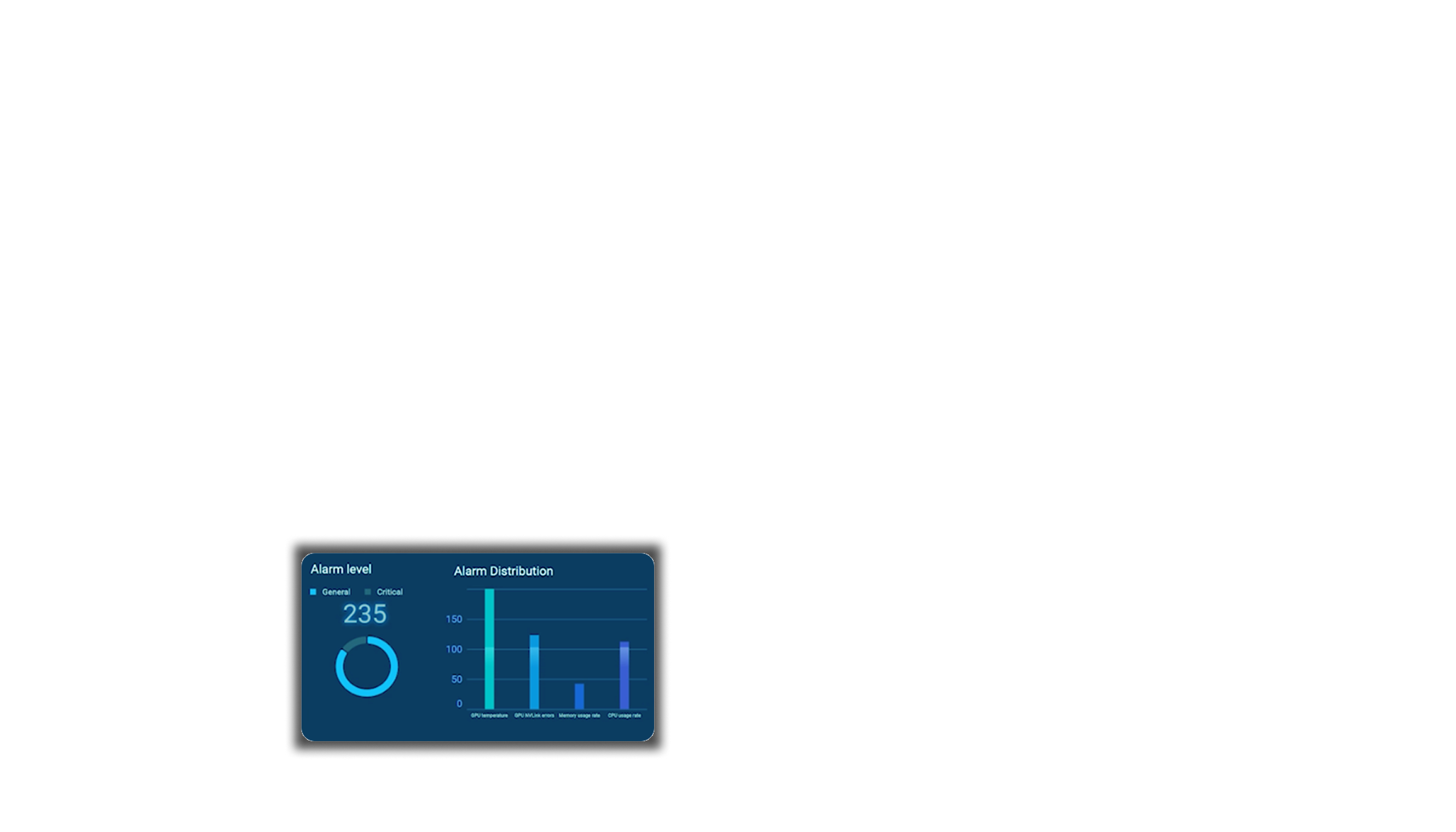

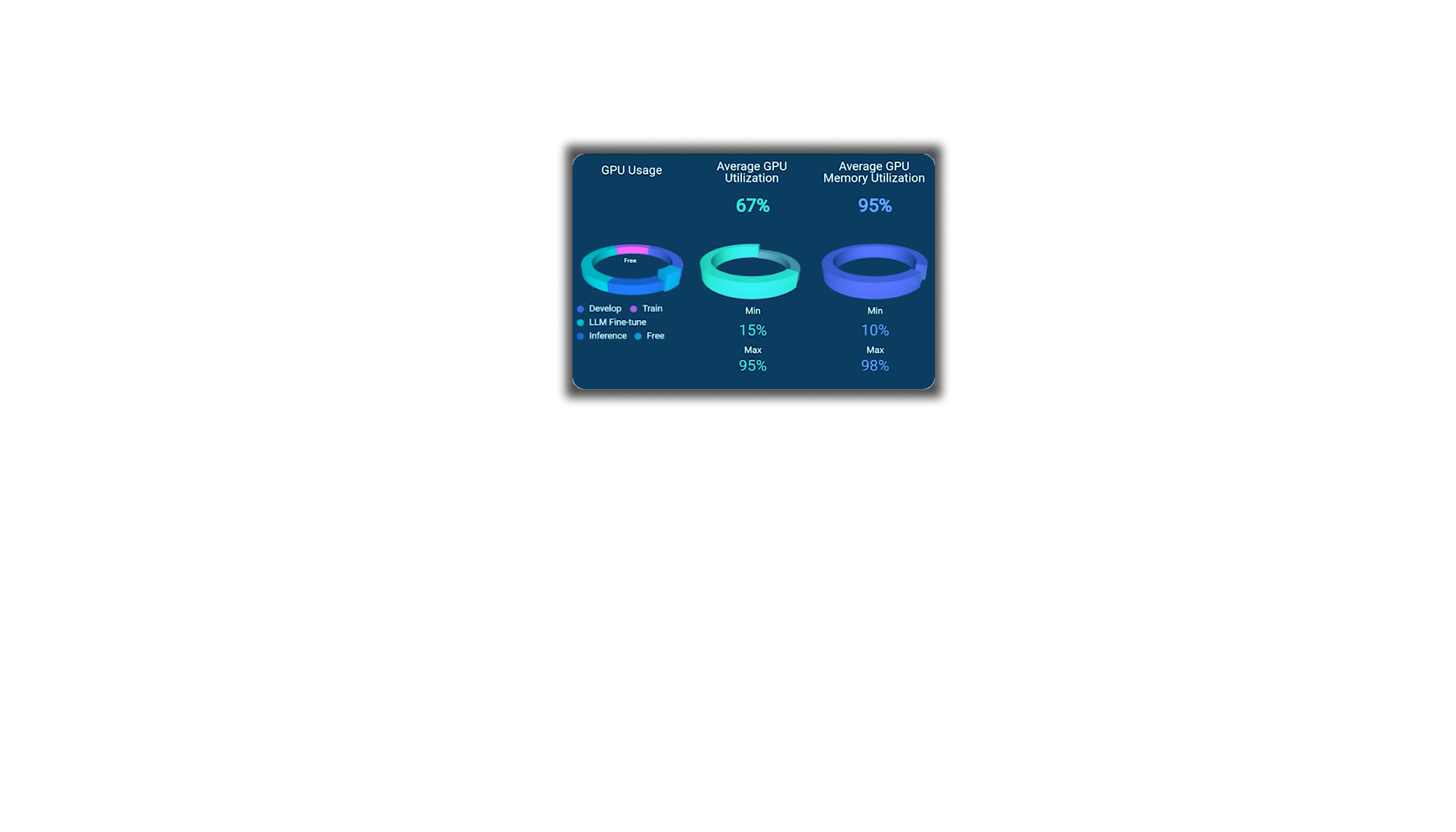

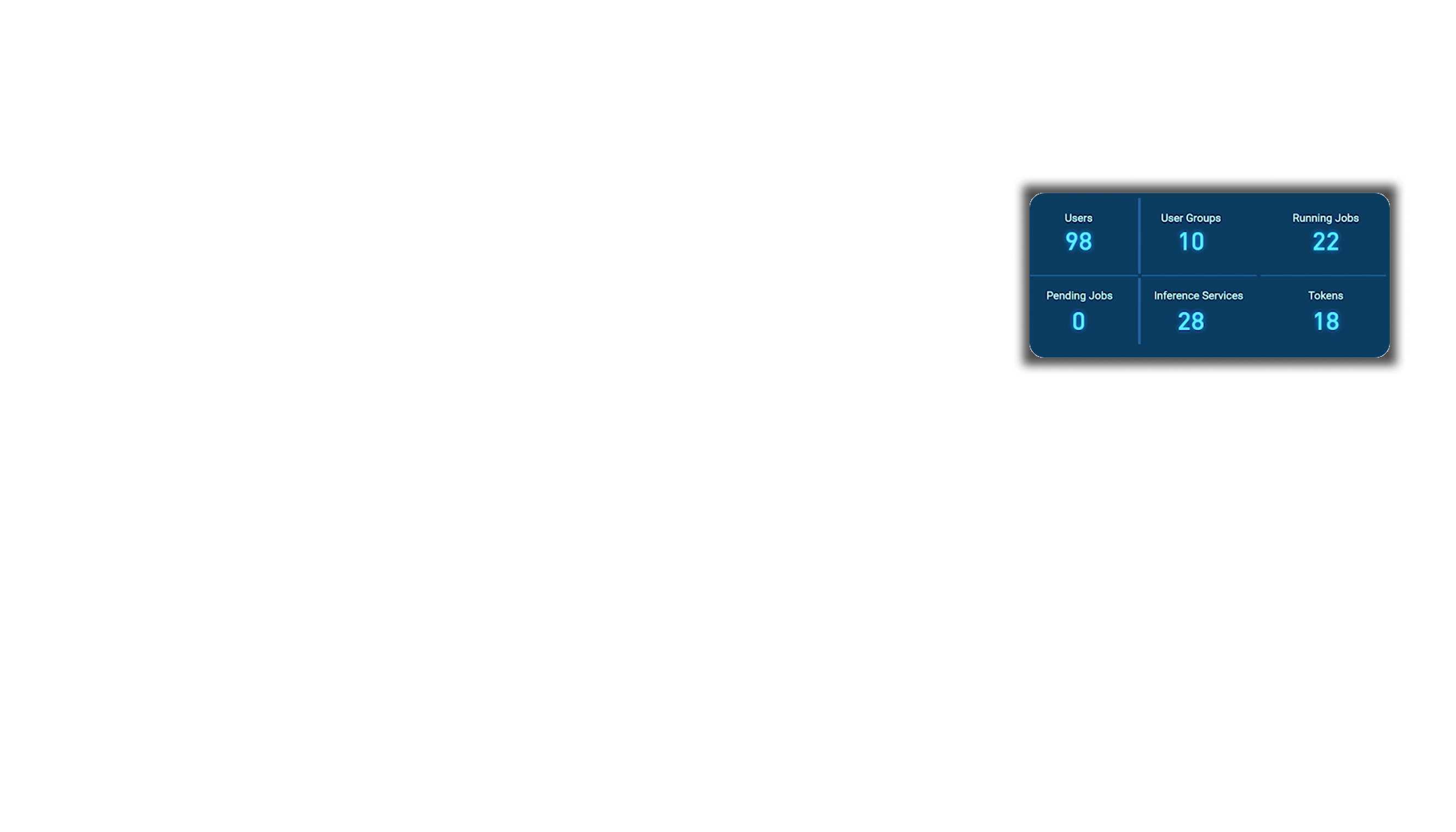

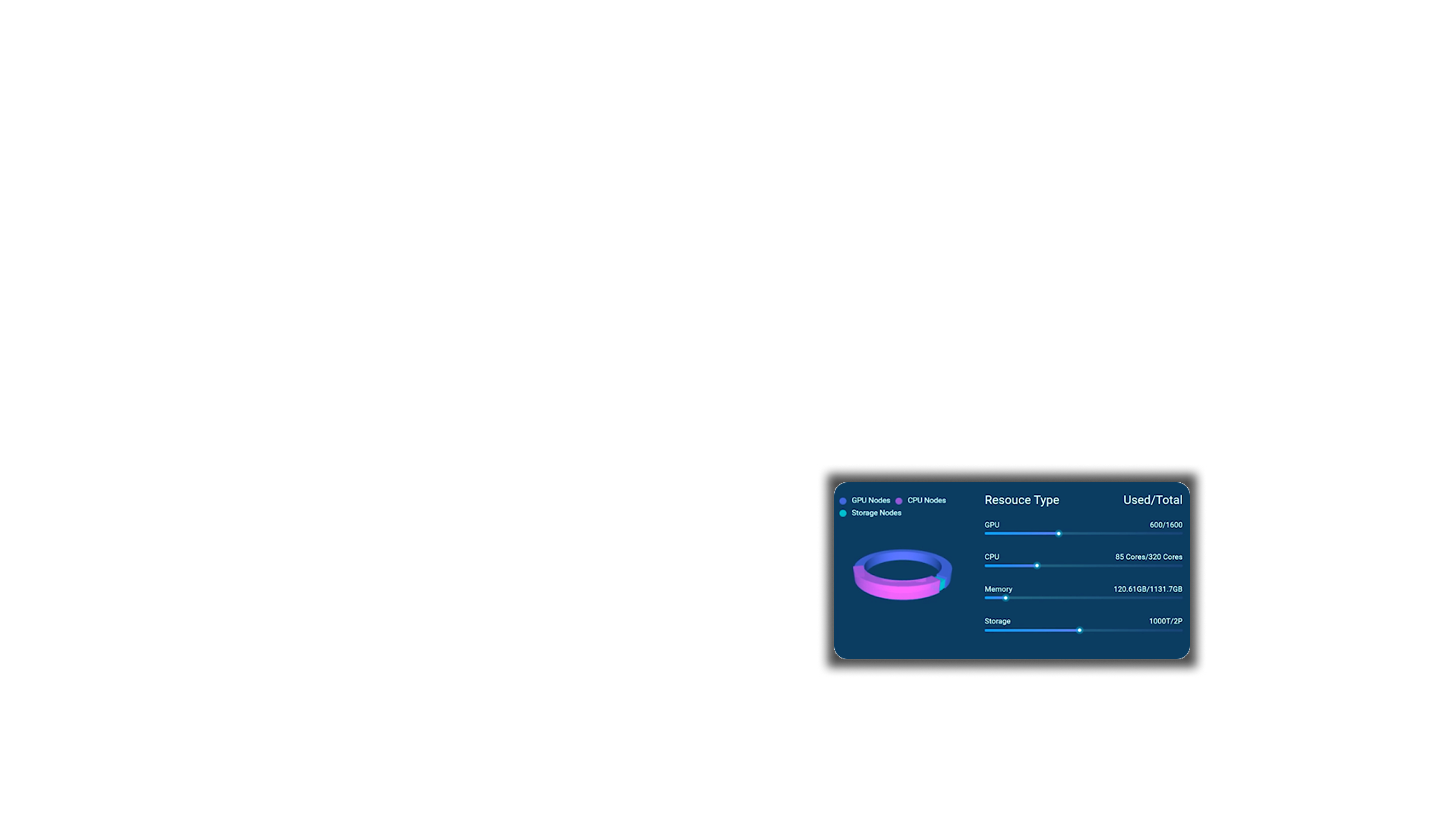

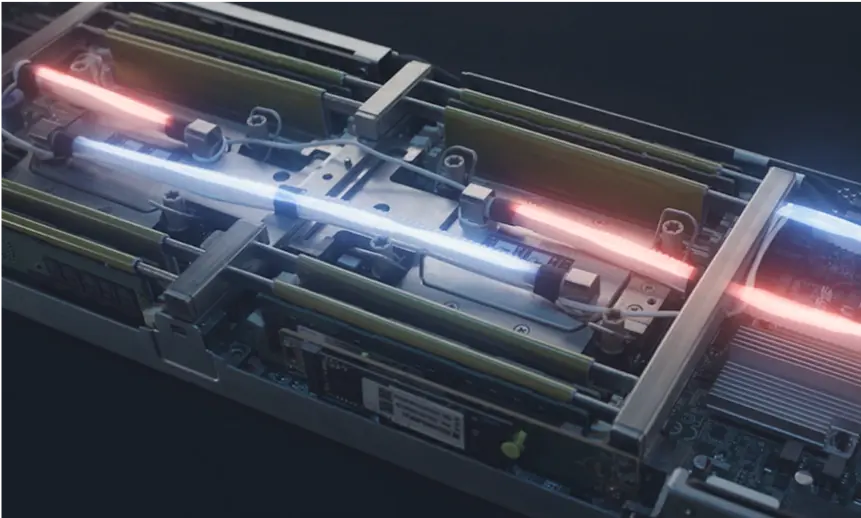

Based on the NVIDIA Blackwell rack-scale architecture, KRS8000 connects 72 Blackwell GPUs and 36 NVIDIA™ Grace CPUs via NVIDIA® NVLink™ so that the rack acts as a single massive GPU. It delivers 130 TB/s of low-latency NVLink bandwidth and 8 TB/s of memory bandwidth per GPU, achieving 30X faster real-time trillion-parameter LLM inference.
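As a rough sanity check on the figures quoted above, the rack-level numbers can be broken down per device. The sketch below is illustrative only: it assumes the 130 TB/s NVLink figure is an aggregate across all 72 GPUs and that the 8 TB/s memory bandwidth applies to each GPU individually. The function and key names are hypothetical, not part of any NVIDIA tool.

```python
# Figures quoted in the KRS8000 description.
NUM_GPUS = 72
NUM_CPUS = 36
NVLINK_AGGREGATE_TBPS = 130.0  # assumed: rack-wide NVLink bandwidth, TB/s
MEMORY_BW_PER_GPU_TBPS = 8.0   # assumed: memory bandwidth per GPU, TB/s


def rack_summary(num_gpus, num_cpus, nvlink_aggregate, mem_bw_per_gpu):
    """Derive per-device and rack-wide figures from the quoted numbers."""
    return {
        # How many GPUs are paired with each Grace CPU.
        "gpus_per_cpu": num_gpus / num_cpus,
        # Each GPU's share of the aggregate NVLink bandwidth.
        "nvlink_per_gpu_tbps": nvlink_aggregate / num_gpus,
        # Total memory bandwidth if every GPU sustains its own peak.
        "memory_bw_aggregate_tbps": mem_bw_per_gpu * num_gpus,
    }


summary = rack_summary(
    NUM_GPUS, NUM_CPUS, NVLINK_AGGREGATE_TBPS, MEMORY_BW_PER_GPU_TBPS
)

for name, value in summary.items():
    print(f"{name}: {value:.2f}")
```

Under these assumptions, each Grace CPU is paired with two GPUs, each GPU's share of the NVLink fabric is roughly 1.8 TB/s, and the rack-wide memory bandwidth comes to 576 TB/s.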