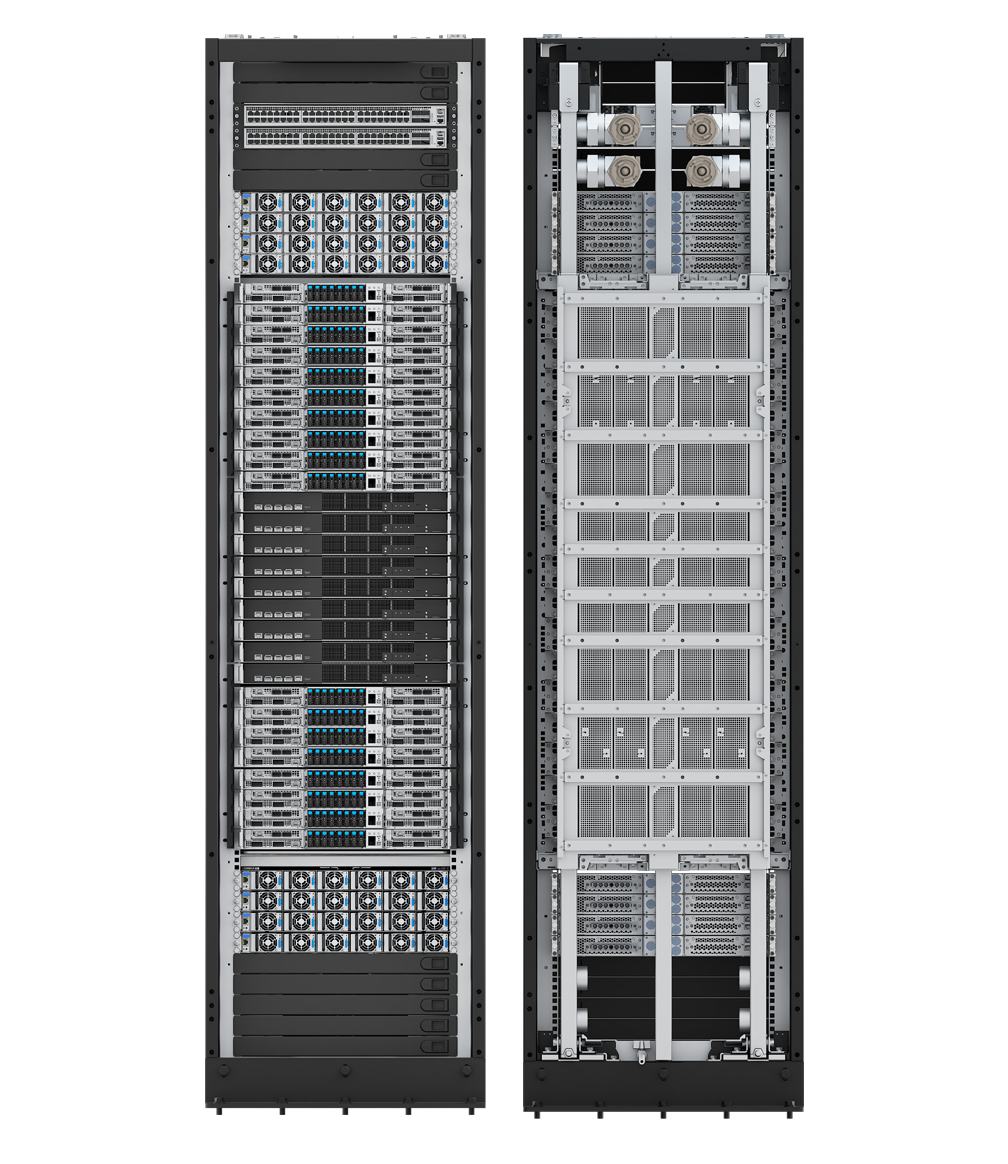

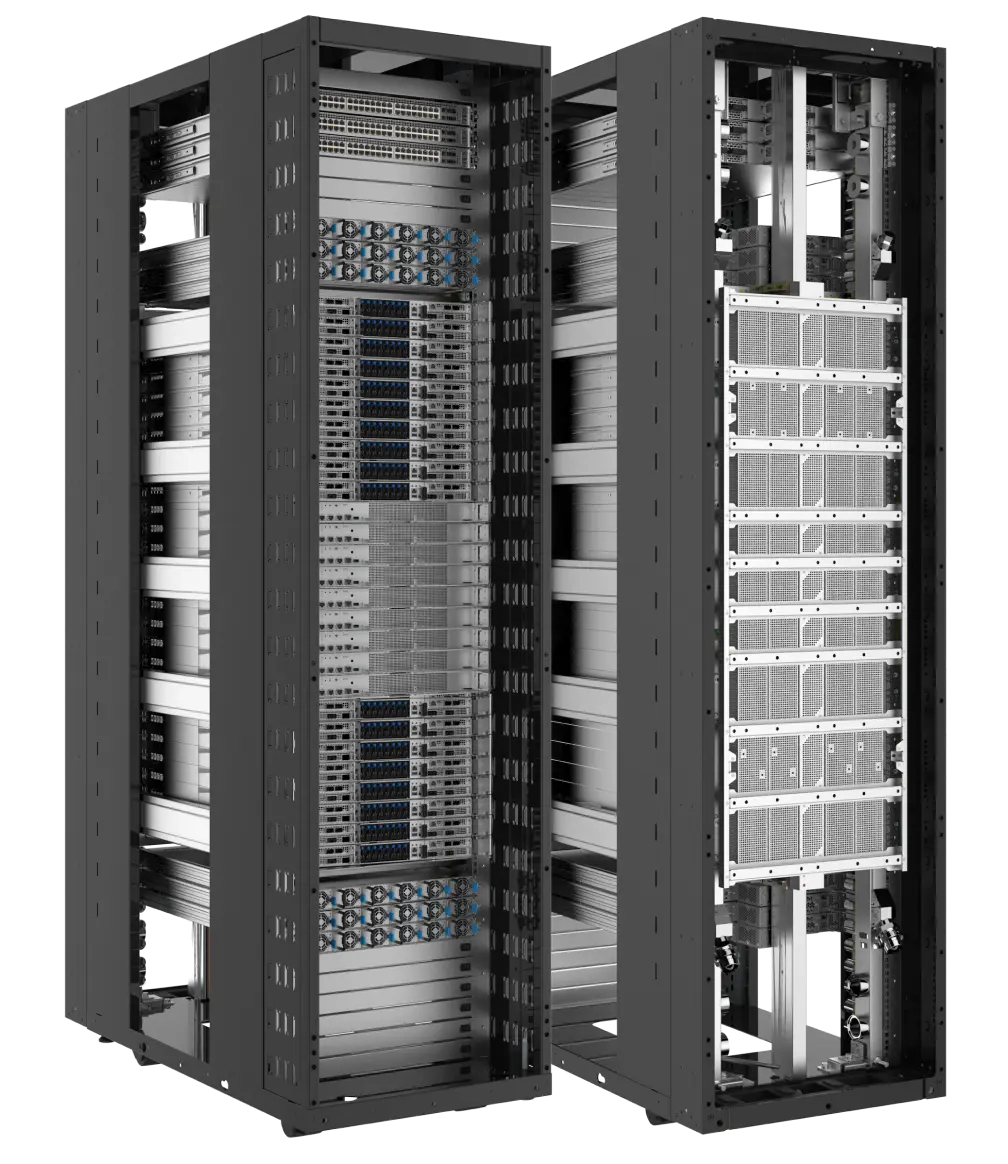

NVIDIA GB300 NVL72 Liquid Cooled Rack

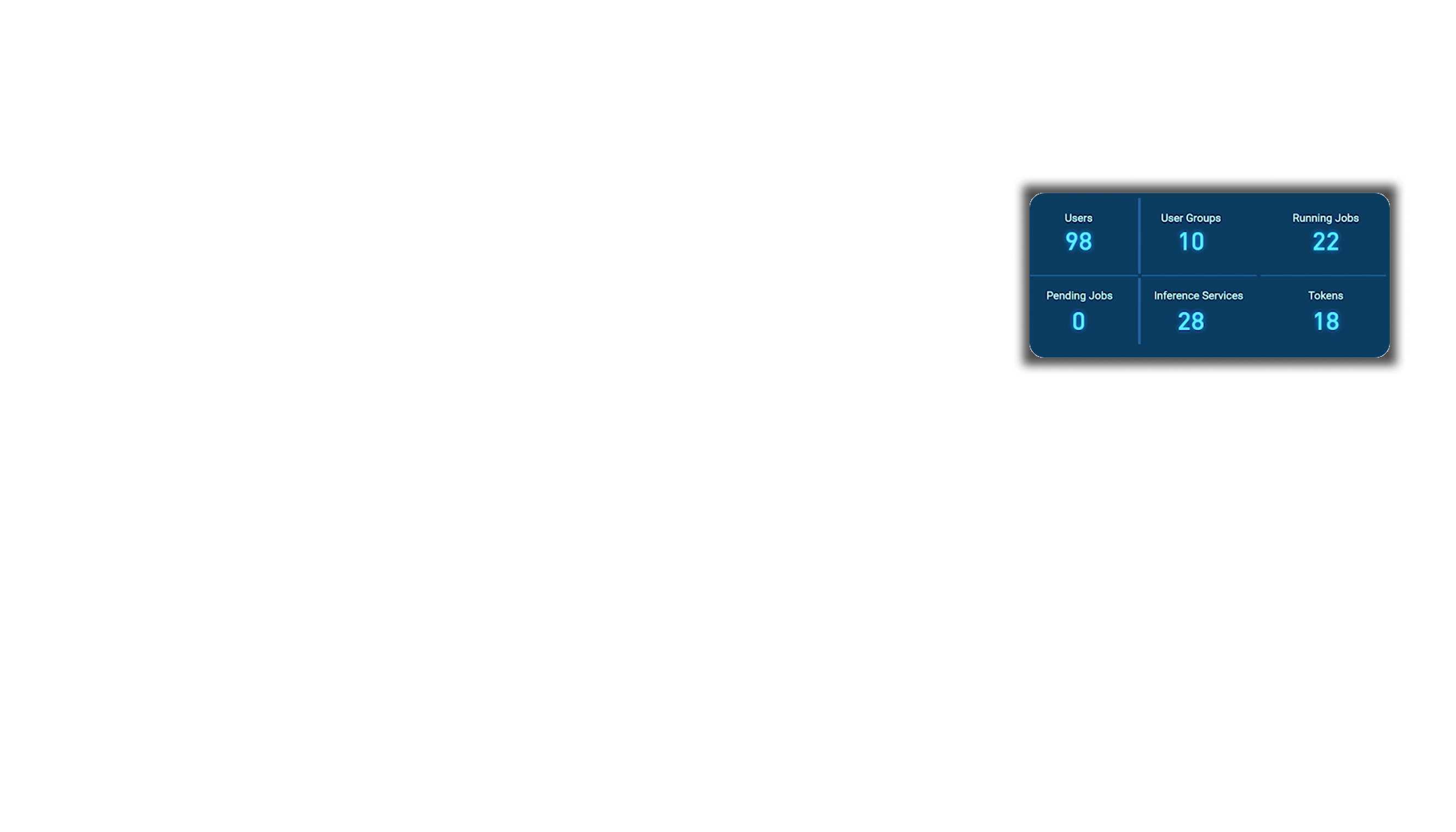

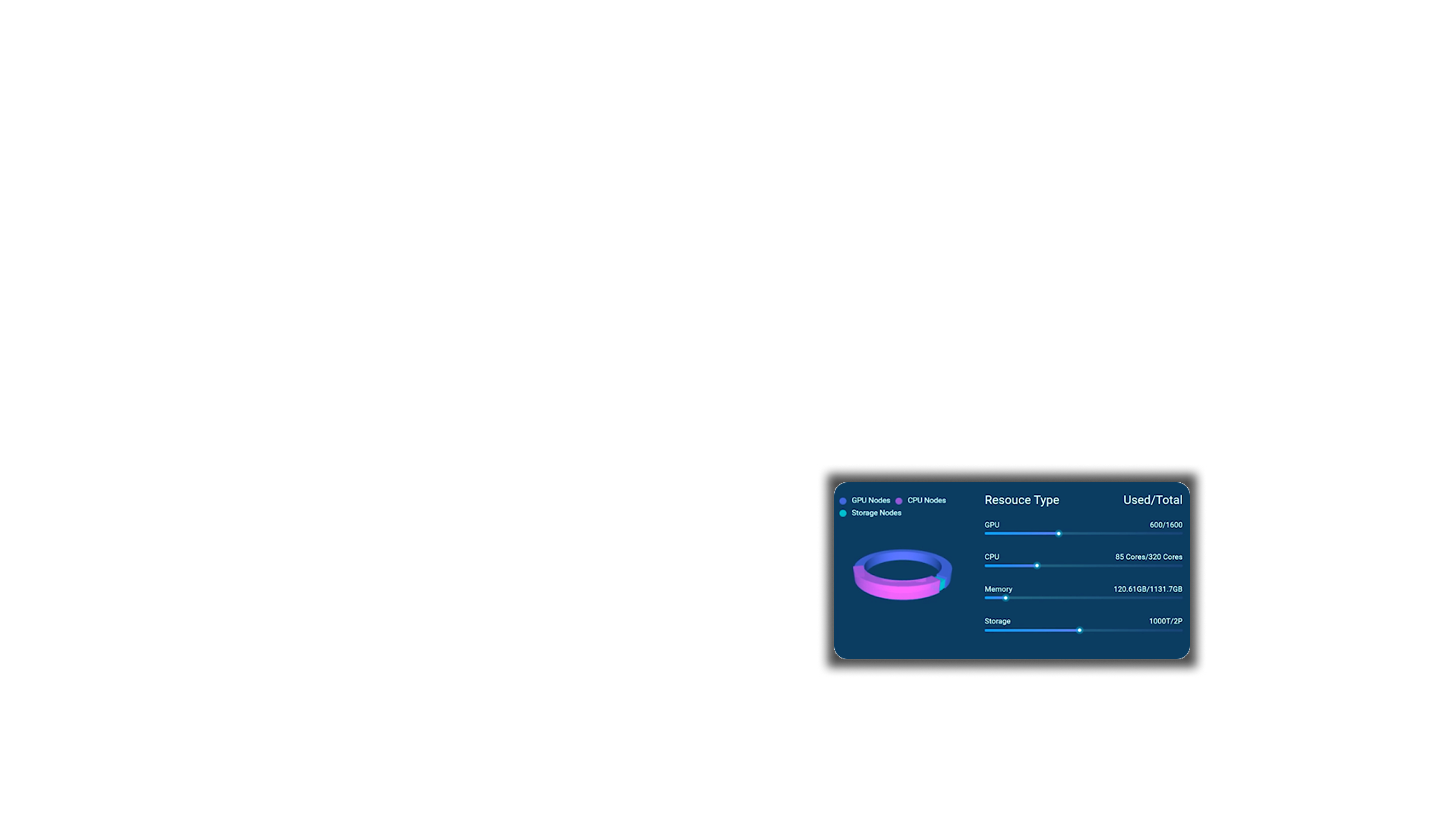

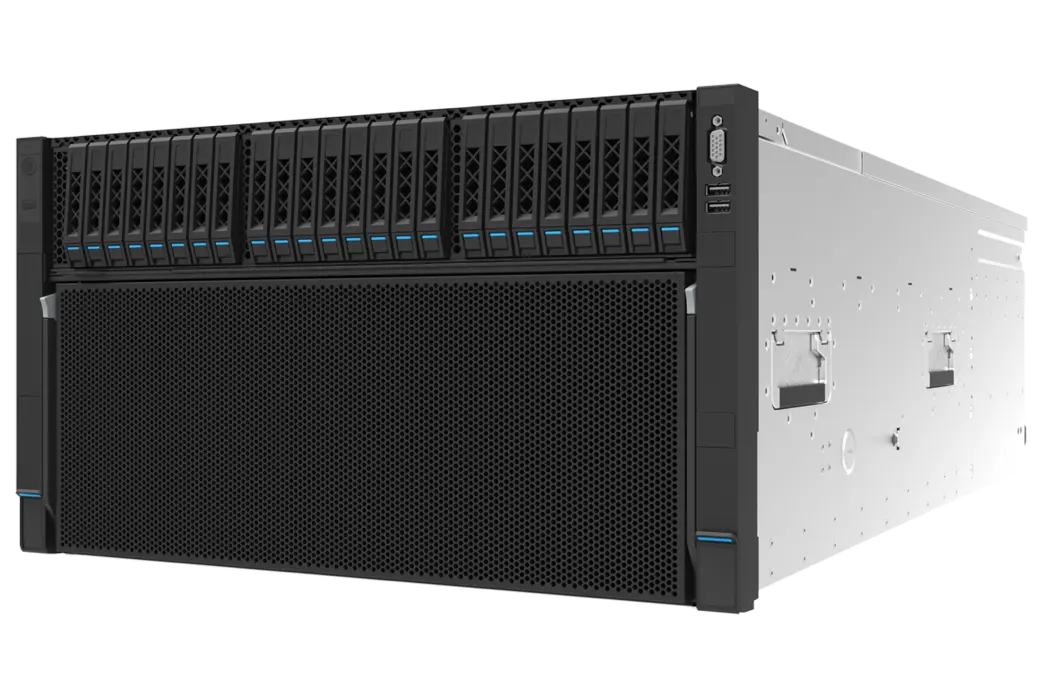

KRS8500

Exascale Performance for AI Reasoning

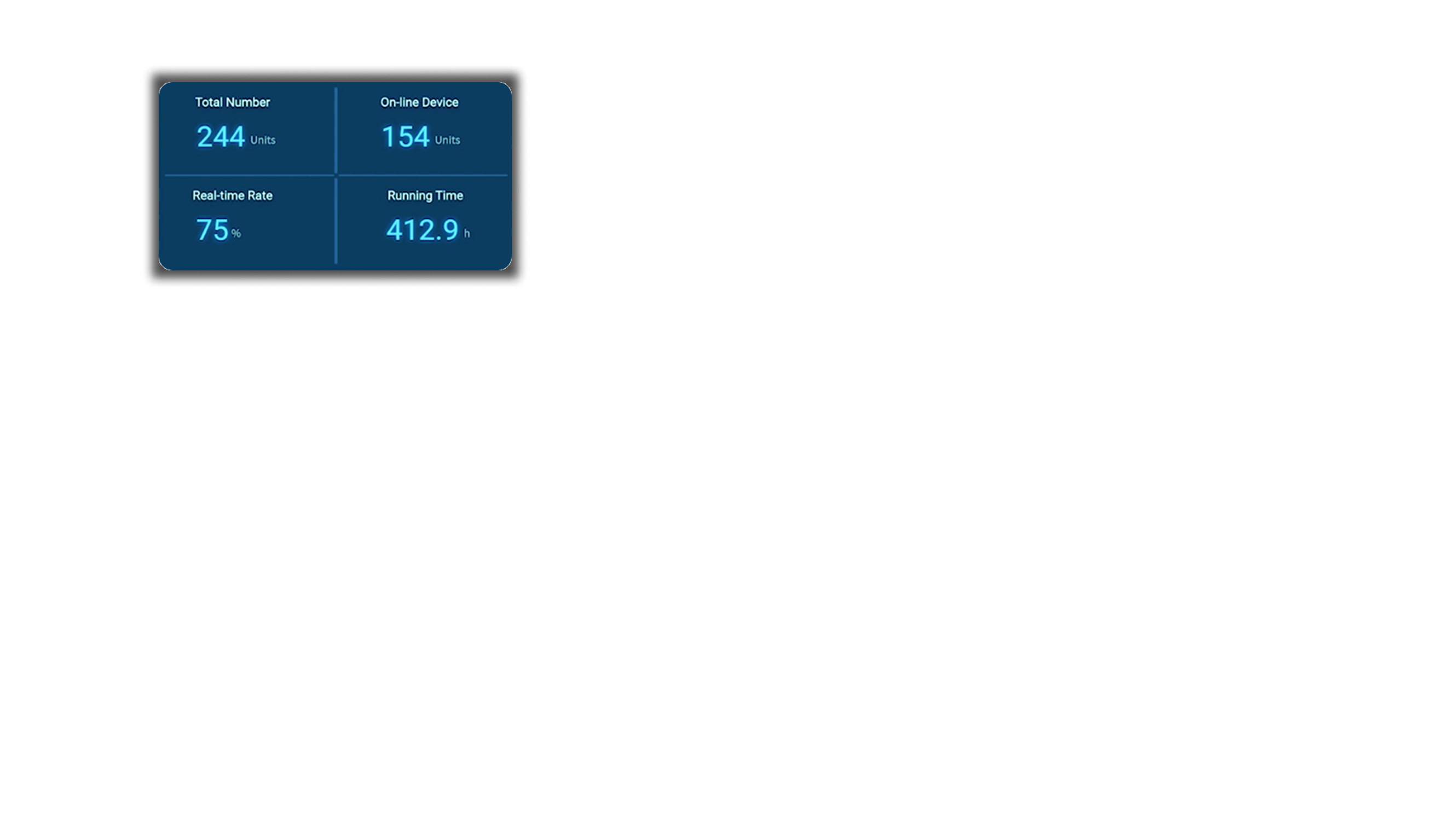

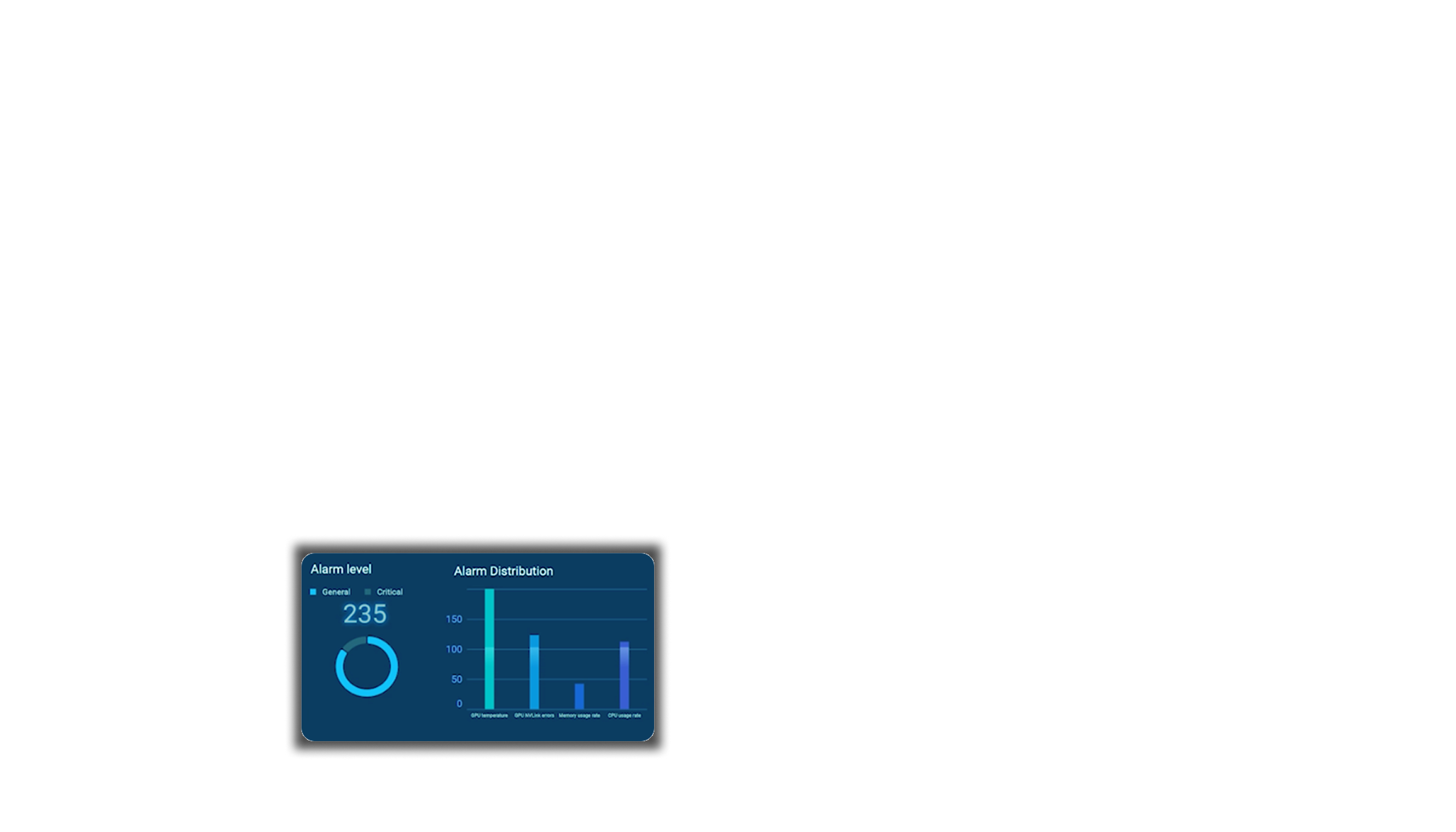

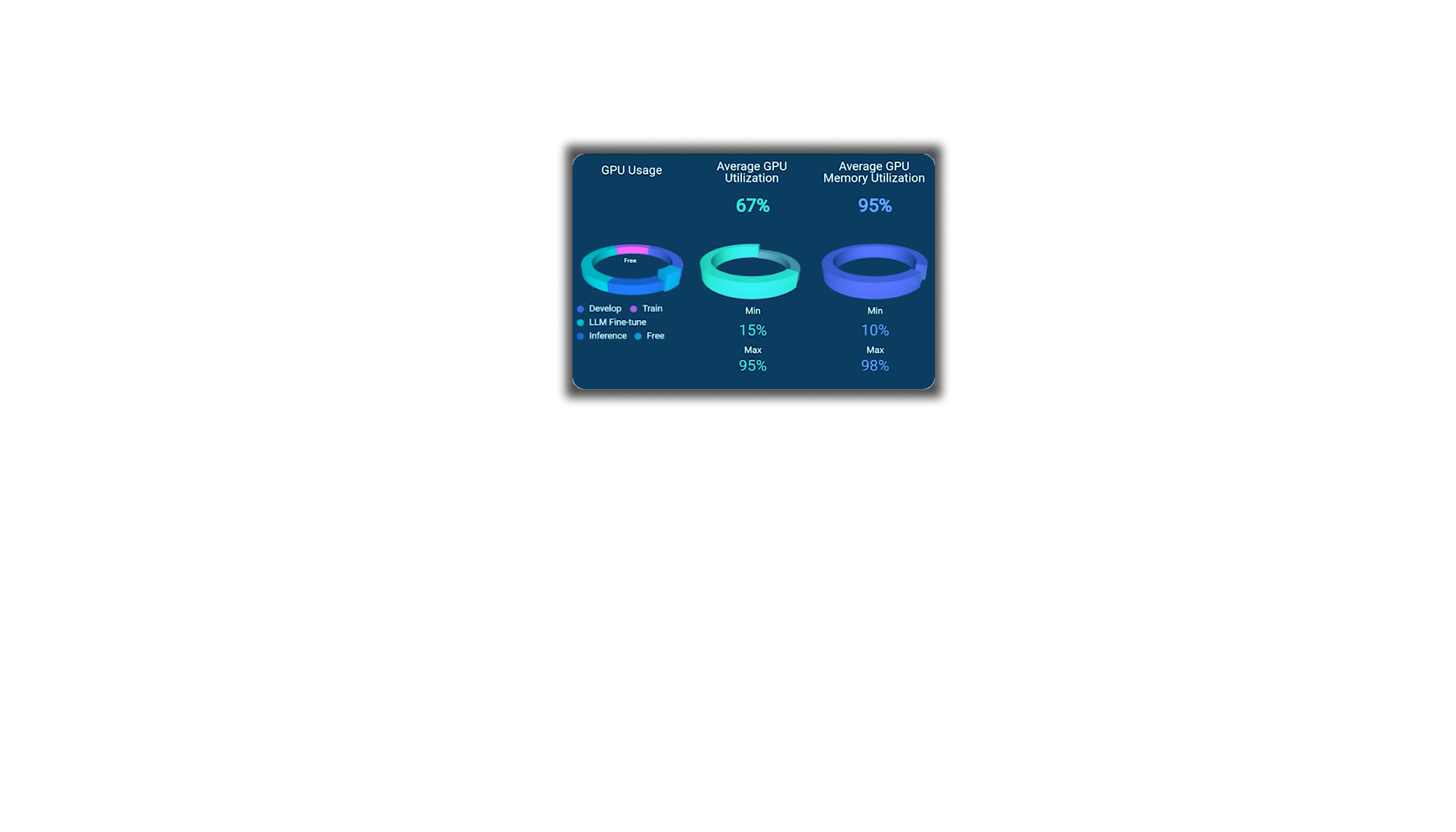

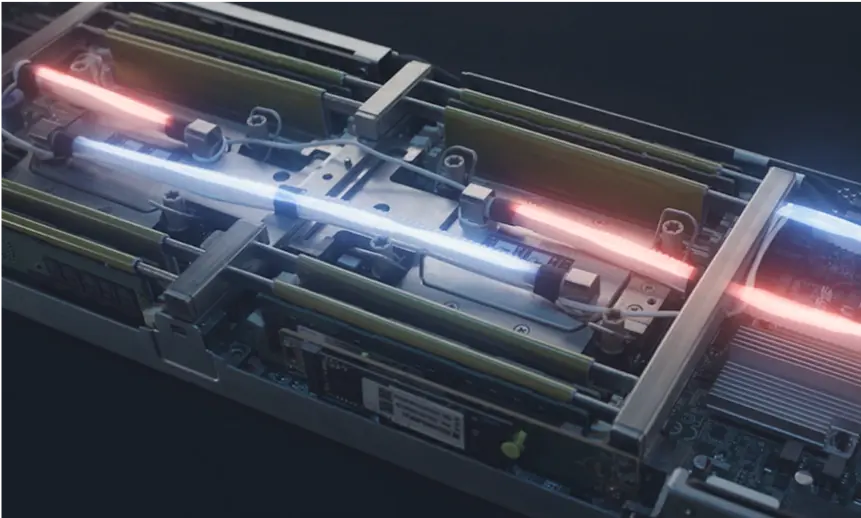

Based on the NVIDIA Blackwell Ultra architecture, Aivres KRS8500 integrates 72 NVIDIA Blackwell Ultra GPUs and 36 NVIDIA Grace™ CPUs, with 288GB of HBM3e memory per GPU and 1.8TB/s of NVLink interconnect bandwidth per GPU, delivering exascale computing in a single rack. With its advanced direct liquid cooling design, KRS8500 delivers extremely high AI performance and energy-efficient compute density for AI data centers.
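To show what the per-GPU figures above imply at rack scale, the short sketch below multiplies them out. It assumes the 1.8TB/s NVLink figure is per GPU and uses decimal units (1TB = 1,000GB). The function and constant names are hypothetical. The results are simple products of the quoted specifications, not measured throughput.

```python
# Hypothetical helper: rack-level totals derived from the per-GPU specs quoted above.
# Assumptions: 1TB = 1,000GB (decimal units), and the 1.8TB/s NVLink figure is per GPU,
# so the rack aggregate is a plain product rather than a measured value.

GPUS_PER_RACK = 72
CPUS_PER_RACK = 36
HBM3E_PER_GPU_GB = 288
NVLINK_PER_GPU_GBPS = 1_800  # 1.8TB/s expressed in GB/s to keep the math in integers


def rack_totals(gpus=GPUS_PER_RACK, cpus=CPUS_PER_RACK,
                hbm_gb=HBM3E_PER_GPU_GB, nvlink_gbps=NVLINK_PER_GPU_GBPS):
    """Return aggregate HBM3e capacity (GB), NVLink bandwidth (GB/s), and GPU:CPU ratio."""
    return {
        "total_hbm3e_gb": gpus * hbm_gb,
        "total_nvlink_gbps": gpus * nvlink_gbps,
        "gpus_per_cpu": gpus // cpus,
    }


if __name__ == "__main__":
    t = rack_totals()
    print(f"HBM3e per rack: {t['total_hbm3e_gb']:,} GB (~{t['total_hbm3e_gb'] / 1000:.1f} TB)")
    print(f"Aggregate NVLink: {t['total_nvlink_gbps'] / 1000:.1f} TB/s")
    print(f"GPUs per Grace CPU: {t['gpus_per_cpu']}")
```

Under these assumptions, one rack holds roughly 20.7TB of HBM3e, with about 129.6TB/s of aggregate NVLink bandwidth and two GPUs per Grace CPU.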