Unleash the transformative possibilities of AI.

With our unique capabilities in deep learning architectures, advanced hardware design, and infrastructure expertise, we create innovative end-to-end AI solutions that help customers leverage the power of AI compute and tackle high-performance workloads in the data center.

AI Unleashed with Aivres + NVIDIA

Aivres and NVIDIA are working together to help businesses harness the power of AI, integrating NVIDIA’s edge AI technology with advanced Aivres server platforms to support the most challenging AI workloads in the industry today.

Leadership AI Performance: AMD GPU Servers

Aivres AI systems powered by AMD Instinct™ GPU accelerators, now including the latest MI350 series, set new standards for exascale AI and HPC infrastructure in the data center, delivering scalable, ready-to-deploy systems for the largest AI models.

Our experience in large models lets us design advanced solutions tailored to clients’ AI projects, helping them maximize training performance and speed up development.

Aivres turnkey solutions are meticulously optimized to support scenarios of every type and scale, from enterprise applications to large training models.

Integrating the latest accelerator technologies, Aivres platforms deliver breakthrough performance, density, stability, efficiency, and flexibility for AI.

Aivres AI Solutions

Supercharge your high-performance workloads with platforms and solutions tailored to a broad spectrum of applications and scales of deployment – from generative AI and real-time graphics to large model development and hyperscale AI factories.

Supporting Workloads at Any Scale

Massive deployments like AI factories, cloud service providers, large language models

Modular architecture to support model training and inference workloads as needed

Versatile building blocks for generative AI, graphics and video processing, visualization

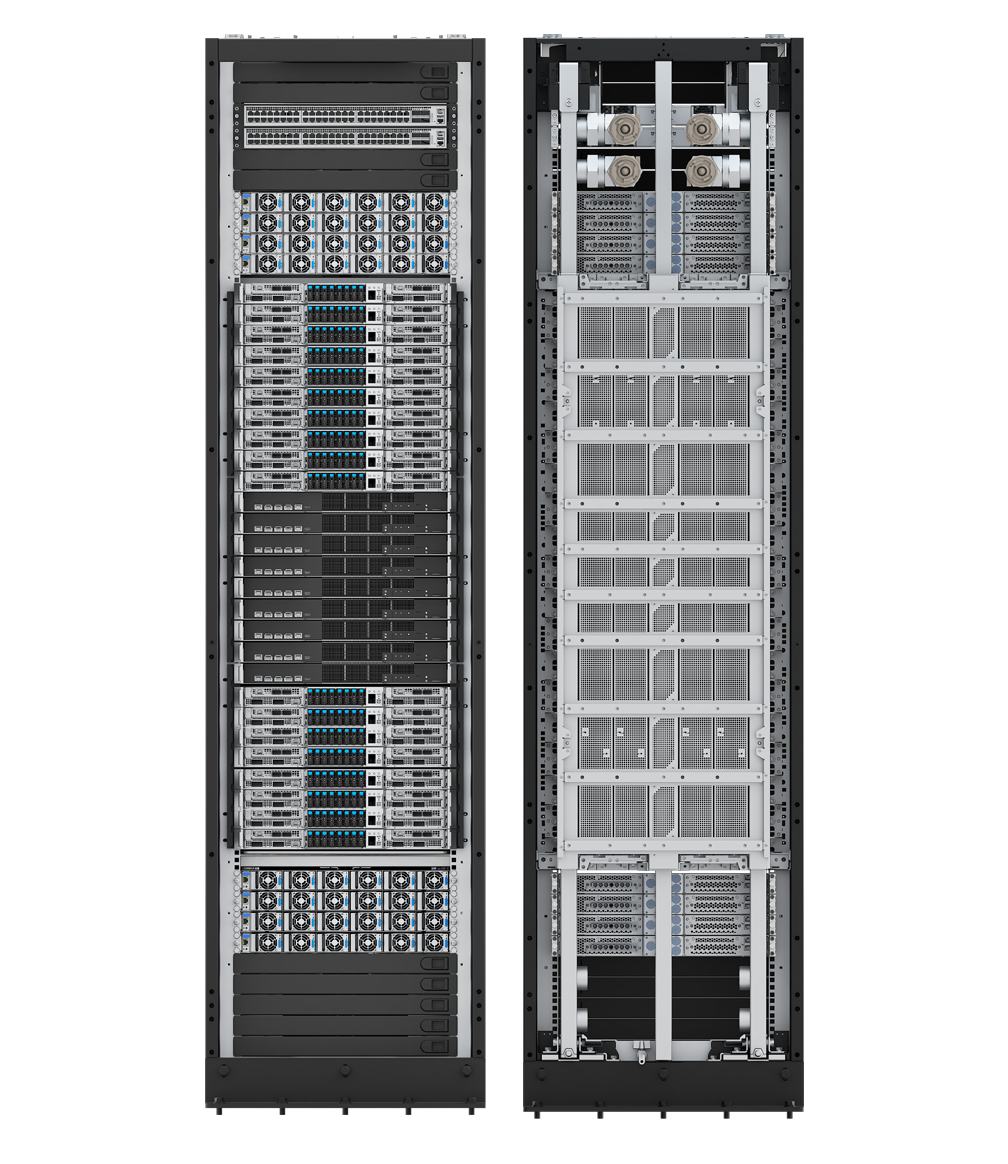

Exascale AI Rack Solutions

Large Language Model Training & Fine-Tuning, Autonomous Driving

Aivres rack-scale AI solutions feature the highest-performance, industry-leading accelerators to power even the largest training models, with up to trillions of parameters. Build powerful AI factories with rack solutions featuring customized topologies, full-stack hardware and software, and cooling and power design tailored to specific data center conditions.

AI Rack Based on NVIDIA GB200 / GB300 NVL72

KRS8000 / KRS8500

Liquid-Cooled NVIDIA Blackwell / Blackwell Ultra Rack-Scale Architecture

- Connects 72 Blackwell Ultra GPUs and 36 Grace™ CPUs via NVIDIA® NVLink®

- Up to 288GB HBM3e memory per GPU and 1.8TB/s NVLink interconnects let the rack act as a single massive GPU for efficient processing

- Breakthrough speed for real-time trillion-parameter LLM inference and training using FP8 precision

- High memory bandwidth with high-speed data transfer via Grace CPU NVLink-C2C interconnect

- Whole rack liquid cooling for optimal energy efficiency
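
As a rough illustration of the figures above, the following Python sketch works out the aggregate HBM3e capacity of a 72-GPU rack and compares it with the weight footprint of a trillion-parameter model at FP8 (one byte per parameter). It assumes the 288GB and 1.8TB/s figures apply per GPU, and it counts model weights only; activations, KV cache, and optimizer state are ignored. The constant and function names are illustrative and are not part of any Aivres or NVIDIA software.

```python
# Back-of-the-envelope sizing for a 72-GPU rack (illustrative names only).
GPUS_PER_RACK = 72            # from the rack description
HBM_PER_GPU_GB = 288          # "up to 288GB HBM3e memory per GPU"
NVLINK_PER_GPU_TBPS = 1.8     # assumption: the 1.8TB/s figure is per GPU
BYTES_PER_PARAM_FP8 = 1       # FP8 stores one byte per parameter


def rack_hbm_capacity_gb(gpus=GPUS_PER_RACK, hbm_gb=HBM_PER_GPU_GB):
    """Total HBM capacity across the rack, in decimal gigabytes."""
    return gpus * hbm_gb


def rack_nvlink_bandwidth_tbps(gpus=GPUS_PER_RACK, per_gpu=NVLINK_PER_GPU_TBPS):
    """Sum of per-GPU NVLink bandwidth across the rack, in TB/s."""
    return gpus * per_gpu


def weights_footprint_gb(num_params, bytes_per_param=BYTES_PER_PARAM_FP8):
    """Memory needed for model weights alone, in decimal gigabytes."""
    return num_params * bytes_per_param / 1e9


if __name__ == "__main__":
    capacity = rack_hbm_capacity_gb()
    weights = weights_footprint_gb(1_000_000_000_000)  # one trillion parameters
    print(f"Rack HBM capacity: {capacity} GB")
    print(f"Aggregate NVLink bandwidth: {rack_nvlink_bandwidth_tbps():.1f} TB/s")
    print(f"FP8 weights for 1T parameters: {weights:.0f} GB")
    print(f"Share of rack HBM used by weights: {weights / capacity:.1%}")
```

Under these assumptions the rack holds 20,736 GB of HBM3e, while the FP8 weights of a one-trillion-parameter model occupy about 1,000 GB, which leaves headroom for the runtime state that this estimate leaves out.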

Large-Scale AI Training & Inference

Large Language Model Training & Fine-Tuning, Autonomous Driving

Deploy and scale compute as needed with modular AI Pods based on cutting-edge GPUs and accelerators to empower advanced AI workloads in the data center.

8-GPU AI Platforms

Optimized GPU Modules for Data Center AI

Supports NVIDIA HGX™ B200 / B300, NVIDIA HGX™ H200, AMD Instinct™, Intel® Gaudi®

- 5U, 6U, 9U form factors for data center deployment

- Unified systems for high performance, heterogeneous computing

- Lossless scalability to expand as needed

- Modular designs enable ease of installation, access, operation, and maintenance

- Optimal power efficiency to lower data center PUE

Enterprise, Generative AI

Recommender Systems, Image & Video Processing, Digital Twins and Simulation

Empower a wide variety of enterprise workloads with agile rack mount servers featuring flexible configurations and architectures. Seamlessly integrate and deploy AI hardware resources with Aivres’ full-stack management platform.

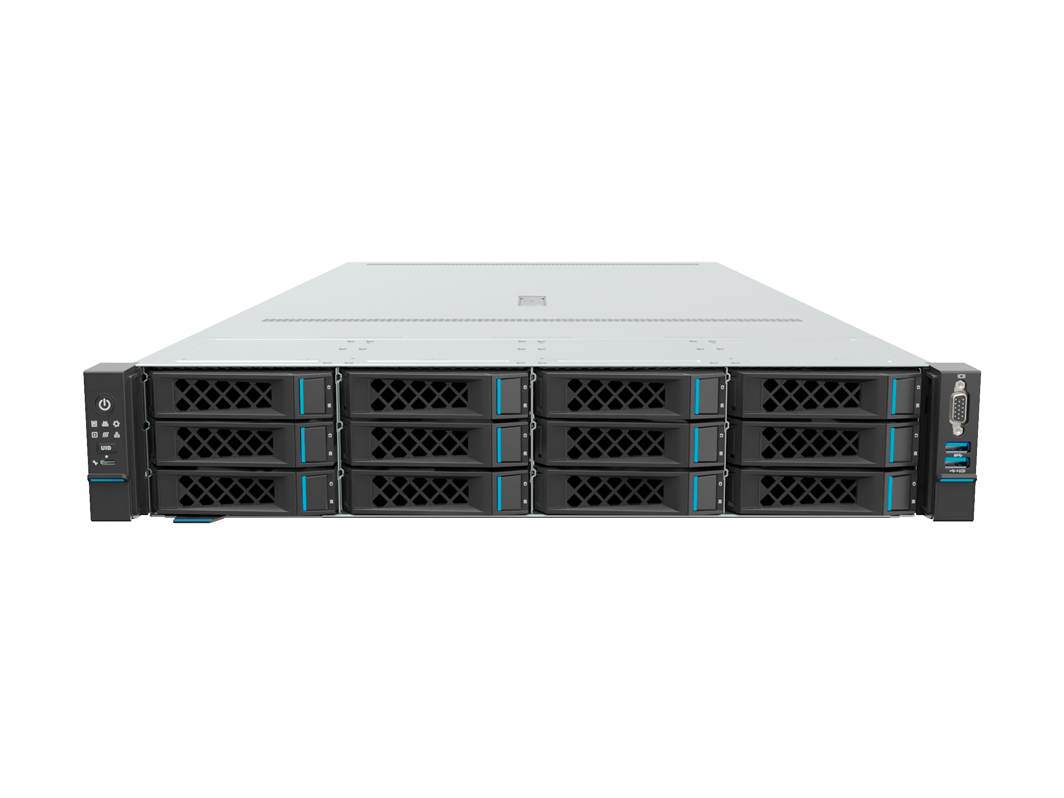

PCIe 5.0 GPU Servers

Widely Versatile AI Building Blocks

Flexible GPU Servers for Enterprise, Gen-AI

- PCIe 5.0 architecture supporting E3.S

- Delivers high-density performance up to 15 PFLOPS

- CXL 1.1 supports storage-level memory expansion

- Flexible topologies and configurations to support various applications

AI DevOps Management and Scheduling Software

Aivres’ full-stack AI DevOps platform integrates GPU and data resources alongside AI development environments to streamline resource allocation, task orchestration, and centralized management, helping enterprises efficiently build and deploy AI models.

- Supports single-device GPU sharing, with up to 64 tasks per GPU

- Fine-grained allocation and partitioning of resources

- Users can dynamically request GPU resources based on GPU memory

- Reduced data cache cycle improves model development and training efficiency

- “Zero-copy” transmission strategy, multi-thread fetch, incremental data updates, training data affinity scheduling

- Integrates mainstream development frameworks including PyTorch and TensorFlow

- Supports distributed training frameworks like Megatron and DeepSpeed

- Automatic fault tolerance processes ensure fast recovery during interruptions

- Real-time performance monitoring and alerts

- Sandbox isolation mechanisms for data with higher security levels
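
To make the GPU sharing model above more concrete, here is a minimal Python sketch of memory-based admission on one shared GPU: a task requests a slice of GPU memory and is admitted only if the memory is free and the 64-task limit has not been reached. This is an assumption-laden illustration, not the Aivres platform's API; `SharedGPU`, `request`, and `release` are hypothetical names, and real schedulers also handle compute partitioning, isolation, and preemption.

```python
from dataclasses import dataclass, field
from typing import Dict

MAX_TASKS_PER_GPU = 64  # "up to 64 tasks shared per GPU" (from the text)


@dataclass
class SharedGPU:
    """Hypothetical model of one GPU shared by several tasks."""
    total_mem_gb: float
    allocations: Dict[str, float] = field(default_factory=dict)

    @property
    def free_mem_gb(self) -> float:
        """Memory not yet reserved by any task."""
        return self.total_mem_gb - sum(self.allocations.values())

    def request(self, task_id: str, mem_gb: float) -> bool:
        """Admit a task if its memory request and the task cap both fit."""
        if mem_gb <= 0 or task_id in self.allocations:
            return False
        if len(self.allocations) >= MAX_TASKS_PER_GPU:
            return False
        if mem_gb > self.free_mem_gb:
            return False
        self.allocations[task_id] = mem_gb
        return True

    def release(self, task_id: str) -> None:
        """Return a task's memory to the pool (no-op if unknown)."""
        self.allocations.pop(task_id, None)


if __name__ == "__main__":
    gpu = SharedGPU(total_mem_gb=80.0)       # capacity chosen for illustration
    print(gpu.request("train-job", 48.0))    # fits in free memory
    print(gpu.request("notebook", 40.0))     # rejected: only 32 GB remain
    gpu.release("train-job")
    print(gpu.request("notebook", 40.0))     # fits after the release
```

Keying admission on requested memory rather than on whole devices is what lets many small jobs, such as notebooks or light inference tasks, share a single accelerator.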

Empowering New Possibilities with Aivres AI

Aivres AI is helping to expand horizons and make a difference in avenues like health and medicine, conservation, autonomous driving, and many more.

Enabling large models for fire monitoring, climate simulation, and water conservancy

Intelligent mass data processing to accelerate scientific research and improve medical services

Powering digital twins and edge computing to deliver immersive experiences across any medium

AI at the edge to enhance smart roads, autonomous vehicles, and driver experience

Integrating cloud, edge, and automation to revolutionize production speed and capacity

Machine learning and data analytics to improve accuracy in transactions and market prediction

Success Stories

Aivres AI solutions powering vital workloads and enabling real-world business transformations.

Accelerating data processing and research in medical institutions


Aiding harm reduction and health monitoring on livestock farms
