OVERVIEW

The Aivres OCP Cloud AI-optimized rack solution builds on Intel® Xeon® Scalable processors, whose integrated Intel Deep Learning Boost provides built-in AI acceleration. It uses dual ON5293M5 OCP Accepted compute nodes connected to 8x OAMs to support large-cluster training. With flexible GPU pooling, both the OAM and UBB modules are designed to support different types of AI accelerators and to run a range of AI applications, such as AI cloud services, deep learning training, and image recognition.

Aivres also offers an open rack solution that pairs 8x ON5388M5 OCP Accepted JBOGs with 2 to 4 OCP Accepted ON5293M5 compute nodes to accommodate a variety of AI applications.

SERVER SOFTWARE

OCP CLOUD AI RACK ARCHITECTURE


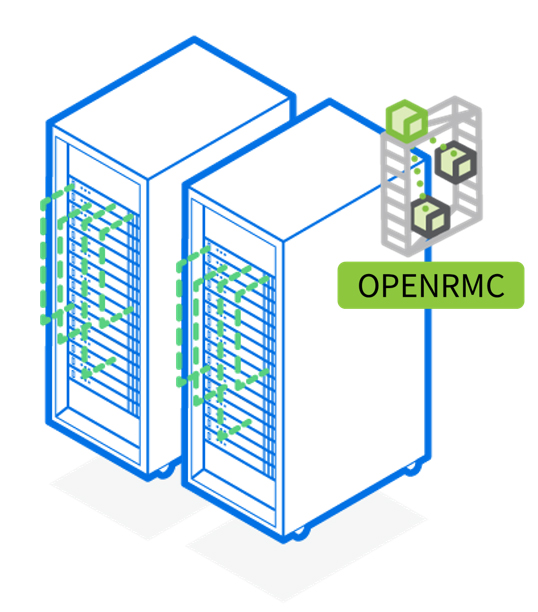

Rack-level Management

To meet customer demands for data center management, maintenance optimization, and system design, Aivres launched the OpenRMC project, which helps small and medium-sized data center customers reduce IT operation and maintenance costs, simplify software-based rack management, and improve efficiency.

OCP Hardware Platform

See ON5263M5 »

See ON5388M5 »

See MX1 »

USE CASES

Use Case 1:

OAI (Open Accelerator Infrastructure) and UBB rack with 8x OAMs

Use Case 2:

Open rack with 8x GPU boxes and 4x compute nodes

Hardware

| Component | Model |
|---|---|
| OAM | Aivres MX1 21″ OCP Accelerator Module |
| Server | Aivres ON5263M5 Power Efficiency Compute Node (“San Jose”) |
| Server | Aivres NF8380M5 Cloud-Optimized Server (“Olympus”) |
| JBOG | Aivres ON5388M5 NVLink GPU Expansion Box (“Mission Bay”) |