Raising the Bar on Performance and Efficiency

Aivres AI systems powered by AMD Instinct™ GPU accelerators set new standards for exascale AI and HPC infrastructure in the data center. Built on Aivres’ modular building-block architecture, these servers integrate the latest AMD Instinct™ MI350 Series 8-OAM platforms to deliver scalable, ready-to-deploy systems for the largest AI models.

Discover New AI Frontiers with

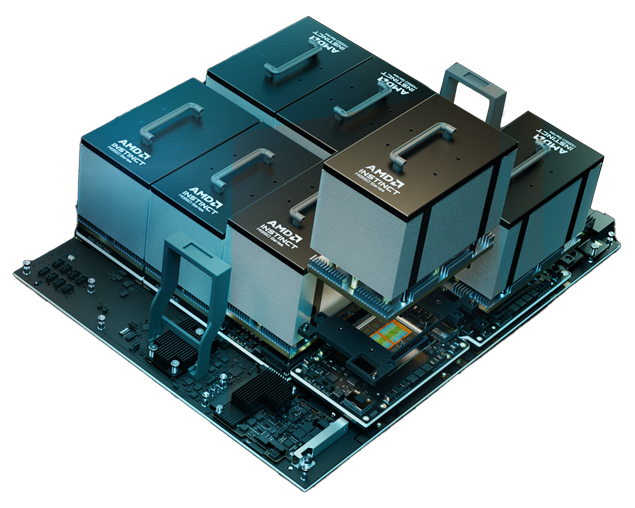

AMD Instinct™ MI350 Series 8-GPU Platform

Aivres AI systems based on AMD Instinct™ MI350 Series GPUs integrate eight GPU OAM modules, fully connected by 4th-Gen AMD Infinity Fabric™ links, on an industry-standard OCP design, providing a ready-to-deploy platform that accelerates time-to-market.

AMD Instinct™ AI Servers

AMD Instinct™ MI350 Series / MI325X

KR9298 10U 8-OAM Server

Powerful Air-Cooled Extreme AI Platform

- AMD Instinct™ MI350 Series / MI325X 8-GPU platform

- 2x AMD EPYC™ 9005 processors

- 2 TB total HBM3E memory capacity, 8 TB/s peak memory bandwidth, 1000W TDP

- Up to 24 DIMM slots, DDR5 memory at 6400 MT/s in 1DPC configuration

- 10x PCIe 5.0 x16 FHHL + 2x PCIe 5.0 x16 HHHL slots

- 12V and 54V partitioned power distribution

AMD Instinct™ MI300X

KR6298-E2 6U 8-OAM Server

Scalable Platform for Data Center AI

- AMD Instinct™ MI300X 8-GPU platform

- 2x AMD EPYC™ 9004 processors

- 1.5 TB total HBM3 memory capacity, 6 TB/s total peak memory bandwidth, 1000W TDP

- Up to 24 DIMM slots, DDR5 memory at 4800 MT/s

- 2x PCIe 5.0 x16 FHHL + 8x PCIe 5.0 x16 HHHL slots

- 24x SATA/NVMe drives, up to 16x NVMe U.2