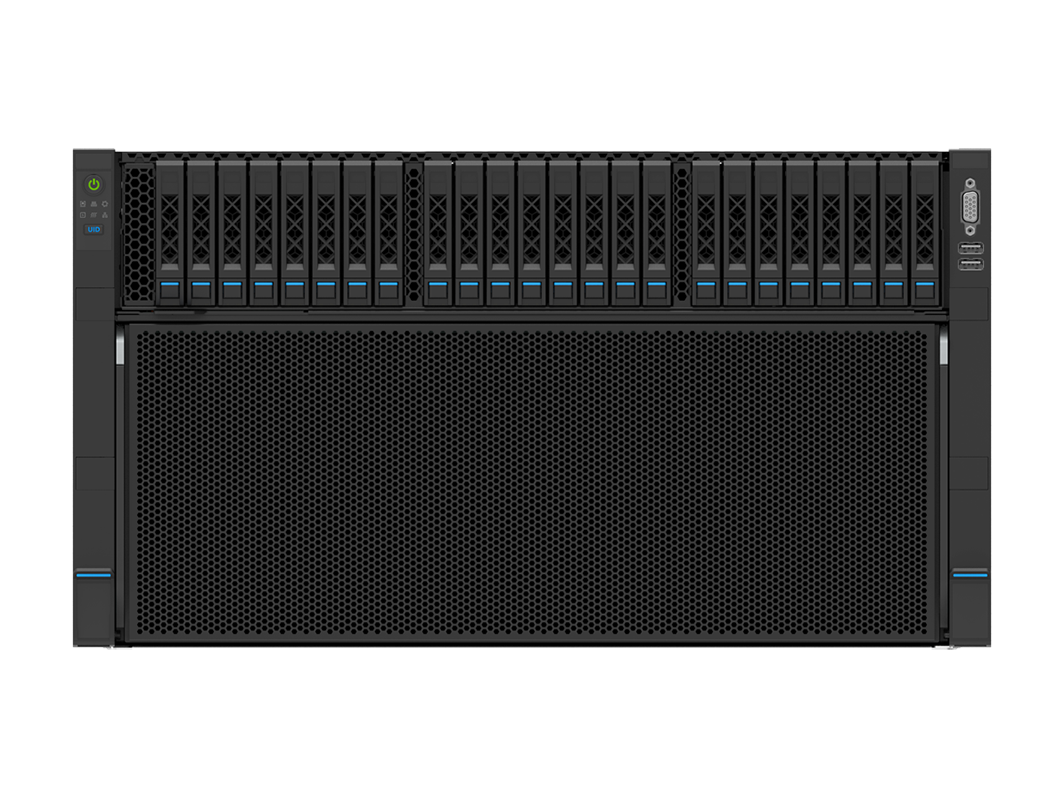

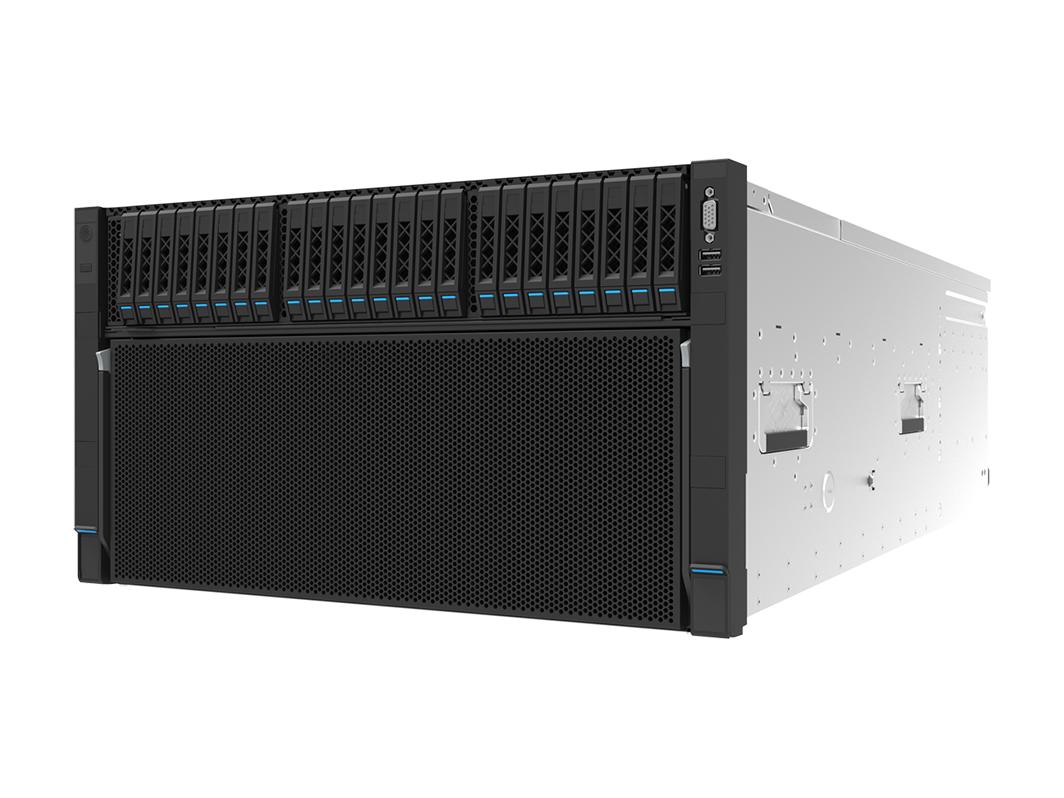

KR6288-E2

6U Extreme AI Server with HGX H200 8-GPU

- Powered by NVIDIA HGX H200 8-GPUs in 6U

- 2x AMD EPYC™ 9004

- Delivers 32 PFlops industry-leading AI performance

- Direct liquid cooling design available with over 80% cold plate coverage

The KR6288-E2 with AMD EPYC™ 9004 processors is an advanced AI system supporting NVIDIA HGX H200 8-GPUs. This server delivers industry-leading 32 PFlops of AI performance and lightning-fast CPU-to-GPU interconnect bandwidth, with the H200 Transformer Engine supercharging training speeds for GPT large language models. Its optimized power efficiency and modular, flexibly configurable design make it ideal for the most demanding AI tasks in scenarios such as hyperscale data centers, AI model training, and metaverse workloads.

Unprecedented AI Performance

- Powered by NVIDIA HGX H200 8-GPUs in 6U, TDP up to 700W

- 2x AMD EPYC™ 9004 processors

- 32 PFlops of industry-leading AI performance

- H200 Transformer Engine delivers supercharged training speed for GPT large language models
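The 32 PFlops figure can be roughly reproduced from per-GPU peak numbers. The sketch below assumes the headline refers to FP8 Tensor Core throughput with sparsity, using the 3,958 TFLOPS per-GPU peak from NVIDIA's public H200 SXM specifications. This datasheet does not state the precision or sparsity mode, so treat the per-GPU constant as an assumption.

```python
# Assumption: per-GPU peak taken from NVIDIA's public H200 SXM figures
# (FP8 Tensor Core, with sparsity). This datasheet does not state the precision.
H200_FP8_SPARSE_TFLOPS = 3958
GPU_COUNT = 8  # HGX H200 8-GPU baseboard


def system_pflops(gpu_count=GPU_COUNT, tflops_per_gpu=H200_FP8_SPARSE_TFLOPS):
    """Peak system throughput in PFLOPS (1 PFLOPS = 1000 TFLOPS)."""
    return gpu_count * tflops_per_gpu / 1000


print(f"Peak system throughput: {system_pflops():.1f} PFLOPS")
```

Under these assumptions the total rounds to the 32 PFlops quoted above. Sustained training throughput is typically well below a theoretical peak.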

Leading Architecture Design

- Lightning-fast CPU-to-GPU interconnect bandwidth

- Ultra-high scalable inter-node networking with up to 4.0 Tbps non-blocking bandwidth

- Optimized cluster-level architecture with 8:8:2 ratio of GPU to compute network to storage network
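The 4.0 Tbps and 8:8:2 figures appear to fit together if each of the ten PCIe 5.0 x16 slots carries one 400 Gb/s adapter (for example a ConnectX-7). The per-adapter speed is an assumption here, not something this datasheet states. A minimal sketch of that arithmetic:

```python
# Assumption: one 400 Gb/s network adapter per PCIe 5.0 x16 slot.
# The 8:8:2 ratio is GPUs : compute-network NICs : storage-network NICs.
GPUS = 8
COMPUTE_NICS = 8
STORAGE_NICS = 2
NIC_GBPS = 400  # assumed line rate per adapter


def aggregate_tbps(nic_count, gbps_per_nic=NIC_GBPS):
    """Aggregate line rate in Tb/s for a given number of adapters."""
    return nic_count * gbps_per_nic / 1000


compute_tbps = aggregate_tbps(COMPUTE_NICS)
storage_tbps = aggregate_tbps(STORAGE_NICS)
print(f"Compute network: {compute_tbps} Tb/s, one NIC per GPU: {COMPUTE_NICS == GPUS}")
print(f"Storage network: {storage_tbps} Tb/s")
print(f"Total inter-node: {compute_tbps + storage_tbps} Tb/s")
```

With one compute NIC per GPU, each GPU has its own path to the compute fabric, and storage traffic stays on the two remaining adapters.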

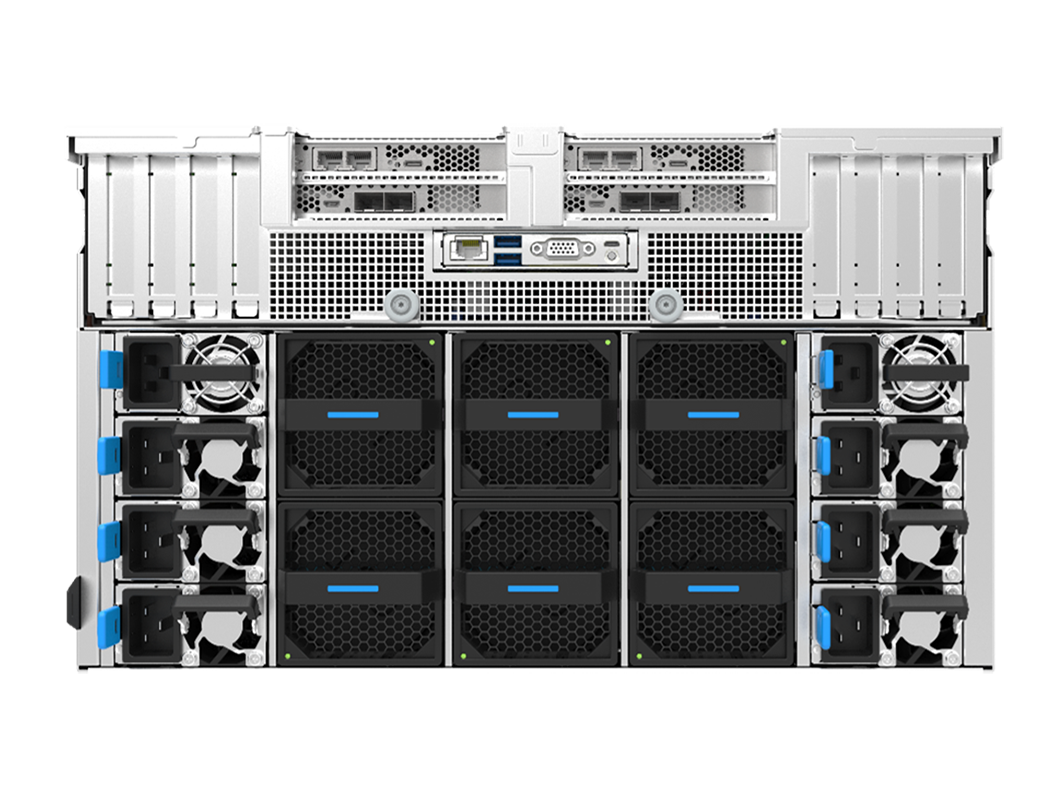

Optimized Energy Efficiency

- Low air-cooled heat dissipation overhead and high power efficiency

- Separate 54V and 12V power supplies with N+N redundancy reduce power conversion loss

- Direct liquid cooling design with over 80% cold plate coverage keeps PUE ≤1.15
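PUE (power usage effectiveness) is total facility power divided by IT equipment power, so a PUE of 1.15 means at most 0.15 W of cooling and distribution overhead per watt of IT load. The sketch below illustrates this with a hypothetical 10 kW IT load. That load is an illustrative value, not a measured figure for this server.

```python
def facility_kw(it_kw, pue):
    """Total facility power implied by an IT load and a PUE value."""
    return it_kw * pue


def overhead_kw(it_kw, pue):
    """Non-IT power (cooling, power distribution) implied by the PUE."""
    return facility_kw(it_kw, pue) - it_kw


IT_LOAD_KW = 10.0  # hypothetical IT load, for illustration only
PUE_LIMIT = 1.15   # upper bound quoted for the liquid-cooled design

print(f"Facility power: {facility_kw(IT_LOAD_KW, PUE_LIMIT):.2f} kW")
print(f"Overhead:       {overhead_kw(IT_LOAD_KW, PUE_LIMIT):.2f} kW")
```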

Flexible Configurations for AI Scenarios

- Fully modular design and flexible configurations satisfy both on-premises and cloud deployment

- Handles large-scale model training, such as GPT-3, MT-NLG, Stable Diffusion and AlphaFold

- Diversified SuperPod solutions accelerating the most cutting-edge innovation including AIGC, AI4Science and Metaverse

Specifications

| Model | KR6288-E2-A0-R0-00 Air Cooled | KR6288-E2-C0-F0-00 Liquid Cooled |
|---|---|---|
| Form Factor | 6U rack server | |
| Processor | 2x AMD EPYC™ 9004, TDP up to 400W | |
| Memory | Up to 24x 4800 MT/s DDR5 DIMM, RDIMM | |
| GPU | NVIDIA HGX H200 8-GPUs, TDP up to 700W | |
| Storage | 24x 2.5-inch SSD: 8x NVMe U.2 + 16x SATA U.2; 2x NVMe/SATA M.2 | |
| Network Interface | 1x OCP 3.0, supports NCSI | |
| Ports | Front: 1x USB 3.0, 1x USB 2.0, 1x VGA; Rear: 2x USB 3.0, 1x RJ45, 1x MicroUSB, 1x VGA | |
| PCIe | 8x HHHL x16 + 2x FHHL x16 PCIe 5.0 slots; one PCIe 5.0 x16 slot can be replaced with two PCIe 5.0 x8 slots; optional support for BlueField-3, CX7, and various SmartNICs | |
| Cooling | Air cooling | Liquid cooling – cold plate |
| Fans | GPU region: 6x 54V hot-swap fans with N+1 redundancy; CPU region: 6x 12V hot-swap fans with N+1 redundancy | |
| Management | DC-SCM BMC management module with ASPEED 2600 | |
| Security | TPM 2.0 (Trusted Platform Module) | |
| Power Supply | 2x 12V 3200W and 6x 54V 2700W Platinum/Titanium PSU with N+N redundancy | |
| Dimensions | 482mm (W) x 263mm (H) x 855mm (D) | |

* All configurations are subject to change without notice
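
As a rough reading of the power figures in the table, the sketch below compares redundant PSU capacity against peak GPU and CPU TDP. It assumes that N+N redundancy means half of each PSU group can carry the full load, that the GPUs draw from the 54V rail, and that the CPUs draw from the 12V rail. The rail mapping is an assumption, and the estimate ignores memory, NICs, drives, fans, and conversion losses.

```python
def usable_watts(psu_count, psu_watts):
    """Capacity that remains when half the supplies are lost (N+N redundancy)."""
    return (psu_count // 2) * psu_watts


# PSU figures from the specifications table.
rail_54v = usable_watts(6, 2700)
rail_12v = usable_watts(2, 3200)

# Peak TDP figures from the specifications table.
gpu_peak = 8 * 700  # assumed to be fed from the 54V rail
cpu_peak = 2 * 400  # assumed to be fed from the 12V rail

print(f"54V rail: {rail_54v} W usable, {gpu_peak} W GPU peak, "
      f"{rail_54v - gpu_peak} W left for other 54V loads")
print(f"12V rail: {rail_12v} W usable, {cpu_peak} W CPU peak, "
      f"{rail_12v - cpu_peak} W left for other 12V loads")
```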